Computers

Past, Present, and the Future


A computer is a machine that manipulates data according to a list of instructions.

Although mechanical examples of computers have existed throughout history, the first devices resembling modern computers were developed in the mid-20th century (1940–1945). The first electronic computers were the size of a large room and consumed as much power as several hundred modern personal computers. Modern computers based on tiny circuits are millions to billions of times more capable than the early machines and occupy a fraction of the space. Simple computers are small enough to fit into a wristwatch and can be powered by a watch battery. Personal computers in their various forms are icons of the information age and are what most people think of as a "computer", but the embedded computers found in devices ranging from fighter aircraft to industrial robots, digital cameras, and children's toys are the most numerous. The ability to store and execute lists of instructions called programs makes computers extremely versatile, distinguishing them from calculators.

The first use of the word "computer" was recorded in 1613, referring to a person who carried out calculations, or computations, and the word continued to be used in that sense until the middle of the 20th century. From the end of the 19th century onwards, though, the word began to take on its more familiar meaning, describing a machine that carries out computations.

The history of the modern computer begins with two separate technologies: automated calculation and programmability. Examples of early mechanical calculating devices include the abacus, the slide rule and arguably the astrolabe. In 1837, Charles Babbage was the first to conceptualize and design a fully programmable mechanical computer, his analytical engine. Limited finances and Babbage's inability to resist tinkering with the design meant that the device was never completed.

In the late 1880s Herman Hollerith invented the recording of data on a machine-readable medium. Prior uses of machine-readable media had been for control, not data. After some initial trials with paper tape, he settled on punched cards. To process these punched cards he invented the tabulator and the keypunch machine. These three inventions were the foundation of the modern information processing industry. Large-scale automated data processing of punched cards was performed for the 1890 United States Census by Hollerith's company, which later became the core of IBM.

During the first half of the 20th century, many scientific computing needs were met by increasingly sophisticated analog computers, which used a direct mechanical or electrical model of the problem as a basis for computation. However, these were not programmable and generally lacked the versatility and accuracy of modern digital computers.

George Stibitz is internationally recognized as a father of the modern digital computer. While working at Bell Labs in November 1937, Stibitz invented and built a relay-based calculator he dubbed the "Model K" (for "kitchen table", on which he had assembled it), which was the first to use binary circuits to perform an arithmetic operation. Later models added greater sophistication, including complex arithmetic and programmability.

A succession of steadily more powerful and flexible computing devices was constructed in the 1930s and 1940s, gradually adding the key features that are seen in modern computers. The use of digital electronics (largely invented by Claude Shannon in 1937) and more flexible programmability were vitally important steps, but defining one point along this road as "the first digital electronic computer" is difficult (Shannon 1940). Notable achievements include:


- Konrad Zuse's electromechanical "Z machines". The Z3 (1941) was the first working machine featuring binary arithmetic, including floating point arithmetic and a measure of programmability. In 1998 the Z3 was proved to be Turing complete, which makes it the world's first operational computer.

- The non-programmable Atanasoff-Berry Computer (1941), which used vacuum-tube-based computation, binary numbers, and regenerative capacitor memory. The use of regenerative memory allowed it to be much more compact than its peers (being approximately the size of a large desk or workbench), since intermediate results could be stored and then fed back into the same set of computation elements.

- The secret British Colossus computers (1943), which had limited programmability but demonstrated that a device using thousands of tubes could be reasonably reliable and electronically reprogrammable. They were used for breaking German wartime codes.

- The Harvard Mark I (1944), a large-scale electromechanical computer with limited programmability.

- The U.S. Army's Ballistic Research Laboratory ENIAC (1946), which used decimal arithmetic and is sometimes called the first general-purpose electronic computer (since Konrad Zuse's Z3 of 1941 used electromagnets instead of electronics). Initially, however, ENIAC had an inflexible architecture which essentially required rewiring to change its programming.

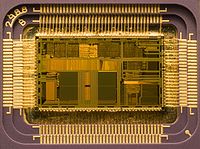

Computers using vacuum tubes as their electronic elements were in use throughout the 1950s, but by the 1960s had been largely replaced by transistor-based machines, which were smaller, faster, cheaper to produce, required less power, and were more reliable. The first transistorized computer was demonstrated at the University of Manchester in 1953. In the 1970s, integrated circuit technology and the subsequent creation of microprocessors, such as the Intel 4004, further decreased the size and cost of computers and further increased their speed and reliability. By the 1980s, computers had become sufficiently small and cheap to replace simple mechanical controls in domestic appliances such as washing machines. The 1980s also witnessed the arrival of home computers and the now ubiquitous personal computer. With the evolution of the Internet, personal computers are becoming as common as the television and the telephone in the household.

Modern smartphones are fully programmable computers in their own right, and as of 2009 may well be the most common form of such computers in existence.

In practical terms, a computer program may run from just a few instructions to many millions of instructions, as in a program for a word processor or a web browser. A typical modern computer, with a clock rate measured in gigahertz (GHz), can execute billions of instructions per second and rarely makes a mistake over many years of operation. Large computer programs consisting of several million instructions may take teams of programmers years to write, and due to the complexity of the task almost certainly contain errors.

Errors in computer programs are called "bugs". Bugs may be benign and not affect the usefulness of the program, or have only subtle effects. In some cases, however, they may cause the program to "hang" (become unresponsive to input such as mouse clicks or keystrokes), or to fail completely, or "crash". Otherwise benign bugs may sometimes be harnessed for malicious intent by an unscrupulous user writing an "exploit": code designed to take advantage of a bug and disrupt a program's proper execution. Bugs are usually not the fault of the computer. Since computers merely execute the instructions they are given, bugs are nearly always the result of programmer error or an oversight made in the program's design.

While it is possible to write computer programs as long lists of numbers (machine language), and this technique was used with many early computers, it is extremely tedious to do so in practice, especially for complicated programs. Instead, each basic instruction can be given a short name that is indicative of its function and easy to remember: a mnemonic such as ADD, SUB, MULT or JUMP. These mnemonics are collectively known as a computer's assembly language. Converting programs written in assembly language into something the computer can actually understand (machine language) is usually done by a computer program called an assembler. Machine languages and the assembly languages that represent them (collectively termed low-level programming languages) tend to be unique to a particular type of computer. For instance, an ARM architecture computer (such as may be found in a PDA or a hand-held video game) cannot understand the machine language of an Intel Pentium or AMD processor that might be in a PC.
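As a rough illustration of what an assembler does, the following sketch translates mnemonics into a list of numbers. The instruction set, the opcode numbers, and the `assemble` function are all invented for this example and do not correspond to any real machine; a real assembler also handles labels, addressing modes, and binary encoding.

```python
# Hypothetical opcode table: these numbers are made up for illustration only.
OPCODES = {"HALT": 0, "LOAD": 1, "ADD": 2, "SUB": 3, "MULT": 4, "JUMP": 5}

def assemble(source):
    """Translate lines of mnemonics into a flat list of numbers (machine code)."""
    machine_code = []
    for line in source.splitlines():
        line = line.split(";", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue                          # skip blank lines
        parts = line.split()
        mnemonic, operands = parts[0].upper(), parts[1:]
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic: {mnemonic}")
        machine_code.append(OPCODES[mnemonic])           # the instruction itself
        machine_code.extend(int(op) for op in operands)  # its numeric operands
    return machine_code

program = """
LOAD 7      ; start with the value 7
ADD 5       ; add 5
SUB 2       ; subtract 2
HALT
"""
machine_code = assemble(program)
print(machine_code)  # [1, 7, 2, 5, 3, 2, 0]
```

The same four lines of source would assemble to entirely different numbers under a different opcode table, which is one way to see why machine language written for one type of computer is meaningless to another.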

While a computer may be viewed as running one gigantic program stored in its main memory, in some systems it is necessary to give the appearance of running several programs simultaneously. This is achieved by multitasking, i.e. having the computer switch rapidly between running each program in turn. One means by which this is done is a special signal called an interrupt, which can periodically cause the computer to stop executing instructions where it was and do something else instead. By remembering where it was executing prior to the interrupt, the computer can return to that task later. If several programs are running "at the same time", the interrupt generator might cause several hundred interrupts per second, with a program switch each time. Since modern computers typically execute instructions several orders of magnitude faster than humans can perceive, it may appear that many programs are running at the same time even though only one is ever executing at any given instant. This method of multitasking is sometimes termed "time-sharing", since each program is allocated a "slice" of time in turn.

Before the era of cheap computers, the principal use for multitasking was to allow many people to share the same computer. Seemingly, multitasking would cause a computer that is switching between several programs to run more slowly, in direct proportion to the number of programs it is running. However, most programs spend much of their time waiting for slow input/output devices to complete their tasks. If a program is waiting for the user to click on the mouse or press a key on the keyboard, then it will not take a "time slice" until the event it is waiting for has occurred. This frees up time for other programs to execute, so that many programs may be run at the same time without unacceptable speed loss.
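The time-slicing idea can be sketched in a few lines of Python. This is only a simulation under simplifying assumptions: each toy "program" is a generator, each `yield` stands in for the point where a timer interrupt would suspend it, and the names `program` and `run_round_robin` are hypothetical. A real operating system preempts programs in hardware-assisted ways that this sketch does not model.

```python
from collections import deque

def program(name, steps):
    """A toy program: each yield marks where an interrupt would suspend it."""
    for i in range(1, steps + 1):
        yield f"{name} step {i}"

def run_round_robin(programs):
    """Give each program one time slice in turn until all have finished."""
    ready = deque(programs)
    trace = []
    while ready:
        current = ready.popleft()
        try:
            trace.append(next(current))  # run one slice of this program
            ready.append(current)        # the generator remembers where it stopped
        except StopIteration:
            pass                         # program finished; do not reschedule it
    return trace

trace = run_round_robin([program("A", 3), program("B", 1), program("C", 2)])
for entry in trace:
    print(entry)
```

Only one program runs during any slice, yet the printed trace interleaves A, B and C, which is the effect a user perceives as the programs running at the same time.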

In the 1970s, computer engineers at research institutions throughout the United States began to link their computers together using telecommunications technology. This effort was funded by ARPA (now DARPA), and the computer network that it produced was called the ARPANET. The technologies that made the ARPANET possible spread and evolved.

In time, the network spread beyond academic and military institutions and became known as the Internet. The emergence of networking involved a redefinition of the nature and boundaries of the computer. Computer operating systems and applications were modified to include the ability to define and access the resources of other computers on the network, such as peripheral devices, stored information, and the like, as extensions of the resources of an individual computer. Initially these facilities were available primarily to people working in high-tech environments, but in the 1990s the spread of applications like e-mail and the World Wide Web, combined with the development of cheap, fast networking technologies like Ethernet and ADSL, saw computer networking become almost ubiquitous. In fact, the number of computers that are networked is growing phenomenally. A very large proportion of personal computers regularly connect to the Internet to communicate and receive information. "Wireless" networking, often utilizing mobile phone networks, has meant networking is becoming increasingly ubiquitous even in mobile computing environments.

The task of developing large software systems presents a significant intellectual challenge. Producing software with an acceptably high reliability within a predictable schedule and budget has historically been difficult; the academic and professional discipline of software engineering concentrates specifically on this problem.